A Survey of Large Language Models - Cheatsheet

The following is an attempt to condense 58 pages of excellent information on large language models (LLMs) from Zhao et al. (2023), A Survey of Large Language Models, into a cheat sheet webpage. The paper is a comprehensive survey of LLMs and their applications. All of the following information can be found in the original paper, and I highly recommend reading it in full, as some details have been excluded here for brevity. Some hyperlinks have been provided to link original model papers or other relevant resources.

Introduction

"Language is a prominent ability in human beings to express and communicate, which develops in early childhood and evolves over a lifetime. Machines, however, cannot naturally grasp the abilities of understanding and communicating in the form of human language, unless equipped with powerful artificial intelligence (AI) algorithms. It has been a longstanding research challenge to achieve this goal, to enable machines to read, write, and communicate like humans."

- Statistical models (SLM): 1990s

- Markov, n-gram language models

- Neural language models (NLM): 2000s

- RNNs (e.g. LSTM, GRU)

- Distributed representation of words (Bengio et al.)

- Pre-trained language models (PLM): 2018

- Large language models (LLM): 2022

- "It is mysterious why emergent abilities occur in LLMs, instead of smaller PLMs. As a more general issue, there lacks a deep, detailed investigation of the key factors that contribute to the superior abilities of LLMs."

- "It is difficult for the research community to train capable LLMs. Due to the huge demand of computation resources, it is very costly to carry out repetitive, ablating studies for investigating the effect of various strategies for training LLMs."

Overview

- "Typically, large language models (LLMs) refer to Transformer language models that contain hundreds of billions (or more) of parameters, which are trained on massive text data."

- "Extensive research has shown that scaling can largely improve the model capacity of LLMs" (e.g. KM scaling law, Chinchilla scaling law)

- "Emergent abilities" that are absent in smaller models arise in large models (e.g. in-context learning (ICL), instruction following, step-by-step reasoning)

- Some techniques have proved beneficial in the evolution of LLMs (e.g. pre-training, fine-tuning, prompt engineering)
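As a rough illustration of what the scaling laws mentioned above imply, the sketch below uses two widely quoted approximations that are not stated in this cheat sheet and should be read as assumptions: training compute of about 6 * N * D FLOPs for N parameters and D training tokens, and the Chinchilla-style rule of thumb of roughly 20 training tokens per parameter. The function name and constants are illustrative only.

```python
import math

FLOPS_PER_PARAM_TOKEN = 6.0   # assumption: compute C ~ 6 * N * D
TOKENS_PER_PARAM = 20.0       # assumption: Chinchilla-style rule of thumb


def compute_optimal_split(compute_flops: float) -> tuple[float, float]:
    """Return (params, tokens) that spend `compute_flops` with D = 20 * N."""
    # C = 6 * N * D and D = 20 * N  =>  C = 120 * N**2
    params = math.sqrt(compute_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    tokens = TOKENS_PER_PARAM * params
    return params, tokens


params, tokens = compute_optimal_split(1e23)
print(f"params ~ {params:.3g}, tokens ~ {tokens:.3g}")
```

Under these assumptions, a larger compute budget is split evenly (in log terms) between model size and data, which is the practical takeaway usually attributed to the Chinchilla scaling law.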

Due to the excellent capacity in communicating with humans, ChatGPT has ignited the excitement of the AI community since its release. The basic principle underlying GPT models is to compress the world knowledge into the decoder-only Transformer model by language modeling, such that it can recover (or memorize) the semantics of world knowledge and serve as a general-purpose task solver.

GPT-2 sought to perform tasks via unsupervised language modeling without explicit fine-tuning using labeled data. However, it was found that GPT-2 was not as capable as its supervised fine-tuned counterparts due to its relatively small size. Ultimately, GPT-3 empirically demonstrated the power of scaling (from 1.5B parameters in GPT-2 to 175B), which brought out emergent abilities such as in-context learning.

GPT-3 formed the foundation for further enhancements thanks to fine-tuning on code data, human alignment through reinforcement learning (RL), and training on conversational data to produce ChatGPT. GPT-4 served as a further benchmark in capability with even greater performance on many evaluation tasks.

Resources of LLMs

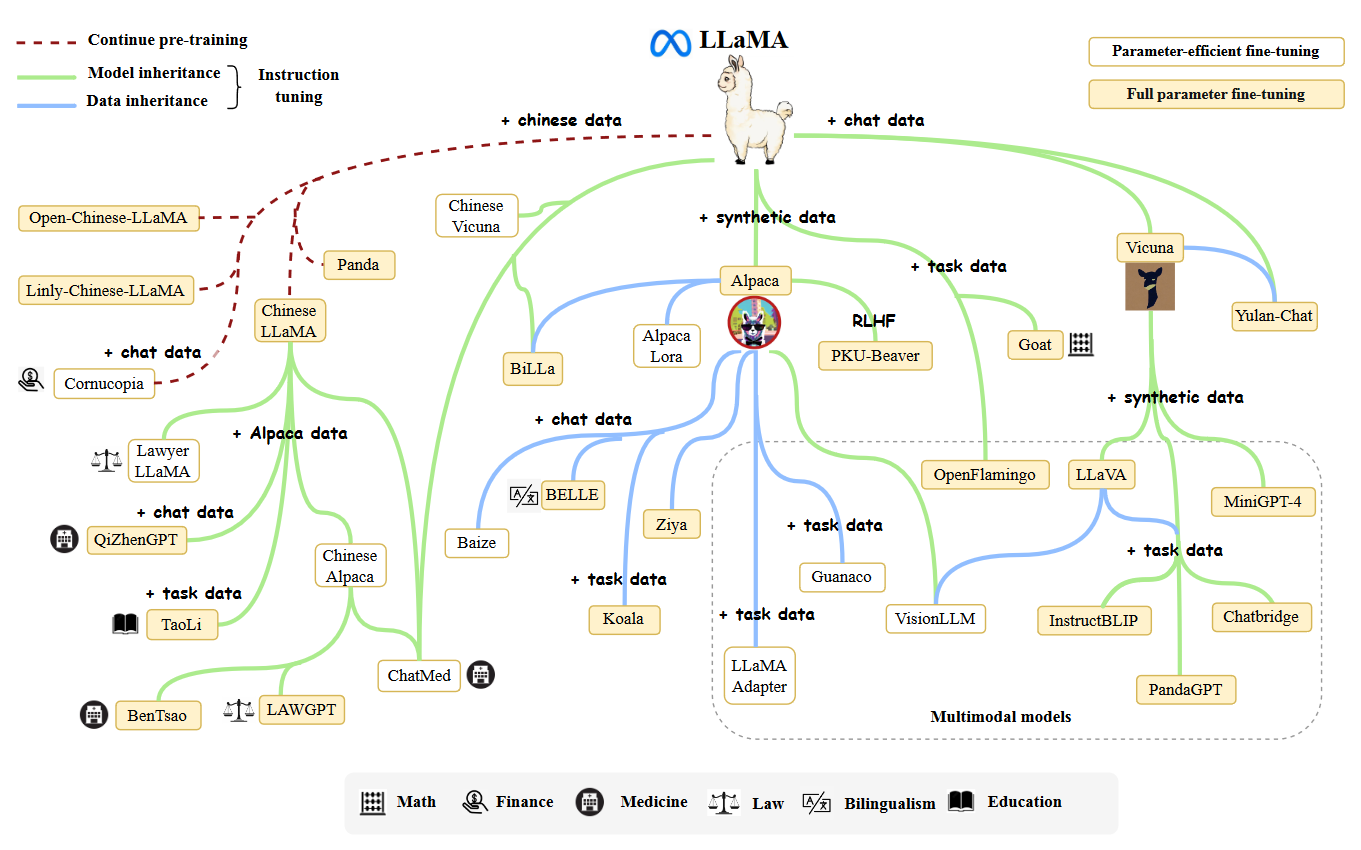

"A feasible way is to learn experiences from existing LLMs and reuse publicly available resources for incremental development or experimental study."

"LLaMA models have achieved very excellent performance on various open benchmarks, which have become the most popular open language models thus far...In particular, instruction tuning LLaMA has become a major approach to developing customized or specialized models, due to the relatively low computational costs."

"Instead of directly using the model copies, APIs provide a more convenient way for common users to use LLMs, without the need of running the model locally." The GPT-series models are the leading example of such a service.

"In contrast to earlier PLMs, LLMs which consist of a significantly larger number of parameters require a higher volume of training data that covers a broad range of content. For this need, there are increasingly more accessible training datasets that have been released for research."

- BookCorpus

- Project Gutenberg

- Books1 and Books2

- GitHub

- StackOverflow

- BigQuery

Pre-training

"Pre-training establishes the basis of the abilities of LLMs. By pre-training on large-scale corpora, LLMs can acquire essential language understanding and generation skills. In this process, the scale and quality of the pre-training corpus are critical for LLMs to attain powerful capabilities. Furthermore, to effectively pre-train LLMs, model architectures, acceleration methods, and optimization techniques need to be well designed."

- General Text Data (i.e. webpages, conversation text, books)

- Specialized Text Data (i.e. multilingual text, scientific text, code)

- Mixture of sources. Source diversity aids model generalization capability

- Amount of pre-training data. More data typically means better performance

- Quality of pre-training data. Low-quality training corpora may hurt performance

- LayerNorm

- RMSNorm

- DeepNorm

- Pre-LN

- Post-LN

- Sandwich-LN

- GeLU

- GLU

- Absolute

- Relative

- Rotary

- ALiBi

- Full attention

- Sparse attention

- Multi-query attention

- FlashAttention

- Language Modeling. "Given a sequence of tokens x = {x1, . . . , xn}, the LM task aims to autoregressively predict the target tokens xi based on the preceding tokens x < i in a sequence."

- Denoising Autoencoding. The model attempts to recover randomly corrupted text.

- Mixture-of-Denoisers. aka UL2 loss

- "Most LLMs are developed based on the causal decoder architecture, and there still lacks a theoretical analysis on its advantage over the other alternatives."

- "One of the main drawbacks of Transformer-based language models is the context length is limited due to the involved quadratic computational costs in both time and memory. Meanwhile, there is an increasing demand for LLM applications with long context windows, such as in PDF processing and story writing."

- Batch Training. Batch size is typically set to a large number; more recent models increase it dynamically during training

- Learning Rate. Warm-up and decay strategies

- Optimizer. e.g., Adam, AdamW, Adafactor

- Stabilizing the Training. e.g., weight decay, gradient clipping

- 3D Parallelism. i.e., data parallelism, pipeline parallelism, tensor parallelism

- ZeRO. Memory efficiency

- Mixed Precision Training. e.g., FP16, BF16. Memory efficiency
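The language modeling objective quoted in the list above (predict each token x_i from the preceding tokens x_<i) can be made concrete with a small sketch. It is a minimal illustration, not the paper's code: `next_token_probs` and `uniform_model` are hypothetical stand-ins for a real model, and the loss is the usual sum of negative log-probabilities.

```python
import math
from typing import Callable, Sequence


def lm_negative_log_likelihood(
    tokens: Sequence[int],
    next_token_probs: Callable[[Sequence[int]], Sequence[float]],
) -> float:
    """Sum of -log P(x_i | x_<i) over the sequence."""
    total = 0.0
    for i, target in enumerate(tokens):
        probs = next_token_probs(tokens[:i])  # distribution given the prefix x_<i
        total -= math.log(probs[target])
    return total


def uniform_model(prefix: Sequence[int], vocab_size: int = 4) -> list[float]:
    # Hypothetical stand-in for an LLM: ignores the prefix entirely.
    return [1.0 / vocab_size] * vocab_size


loss = lm_negative_log_likelihood([0, 3, 1], uniform_model)
print(loss)  # equals 3 * log(4) for the uniform toy model
```

Pre-training minimizes this quantity over a large corpus; a model that assigns higher probability to the actual next tokens gets a lower loss than the uniform baseline.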

Adaptation of LLMs

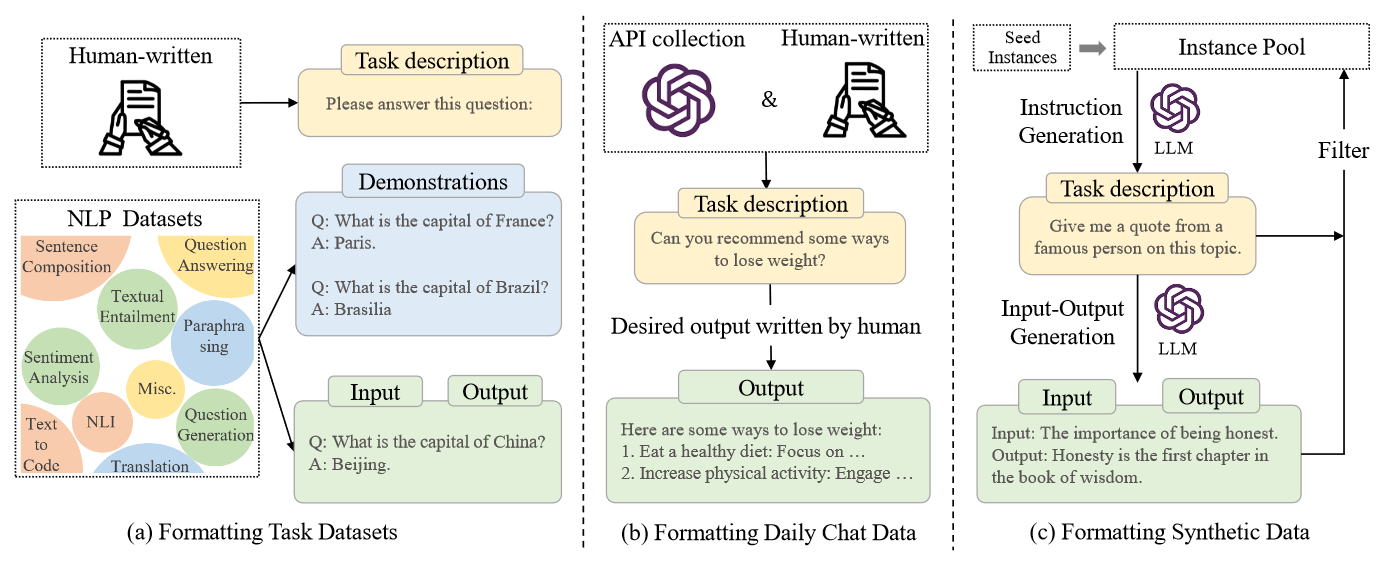

"After pre-training, LLMs can acquire the general abilities for solving various tasks. However, an increasing number of studies have shown that LLM's abilities can be further adapted according to specific goals."

"The approach to fine-tuning pre-trained LLMs on a collection of formatted instances in the form of natural language, which is highly related to supervised fine-tuning and multi-task prompted training. In order to perform instruction tuning, we first need to collect or construct instruction-formatted instances. Then, we employ these formatted instances to fine-tune LLMs in a supervised learning way (e.g., training with the sequence-to-sequence loss). After instruction tuning, LLMs can demonstrate superior abilities to generalize to unseen tasks, even in a multilingual setting."

Instruction tuning can improve performance even with a moderate number of instruction instances. Furthermore, it helps models generalize across tasks thanks to an enhanced ability to follow human instructions. Lastly, the technique can be used to develop specialized models for domain-specific tasks.

Experiment Findings

- "Task-formatted instructions are more proper for the QA setting, but may not be useful for the chat setting."

- "A mixture of different kinds of instructions are very helpful in improving the comprehensive abilities of LLMs."

- "Enhancing the complexity and diversity of instructions leads to an improved model performance."

- "Simply increasing the number of instructions may not be that useful, and balancing the difficulty is not always helpful."
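To make "instruction-formatted instances" more tangible, here is a minimal sketch of turning one instance into a (source, target) text pair for supervised fine-tuning. The field names and the `Instruction:/Input:/Output:` template are assumptions chosen for illustration; real datasets use a variety of templates.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    instruction: str   # task description in natural language
    input: str         # optional task input (may be empty)
    output: str        # desired response


def to_training_pair(ex: Instance) -> tuple[str, str]:
    """Format one instance as (source, target) text for a seq2seq-style loss."""
    source = f"Instruction: {ex.instruction}\n"
    if ex.input:
        source += f"Input: {ex.input}\n"
    source += "Output:"
    return source, " " + ex.output


src, tgt = to_training_pair(Instance("Translate to French.", "Good morning", "Bonjour"))
print(src + tgt)
```

During instruction tuning the loss would typically be computed on the target part, so the model learns to produce the response given the formatted instruction.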

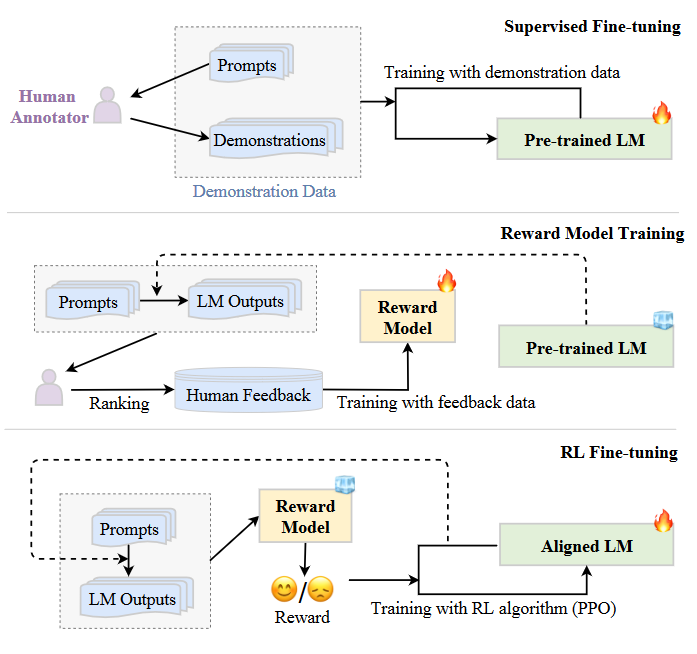

"LLMs have shown remarkable capabilities in a wide range of NLP tasks. However, these models may sometimes exhibit unintended behaviors, e.g., fabricating false information, pursuing inaccurate objectives, and producing harmful, misleading, and biased expressions."

Models should seek to be helpful, honest, and harmless.

- Appropriate selection of human labelers for data annotation is essential

- Human Feedback Collection:

- Ranking-based

- Question-based

- Rule-based

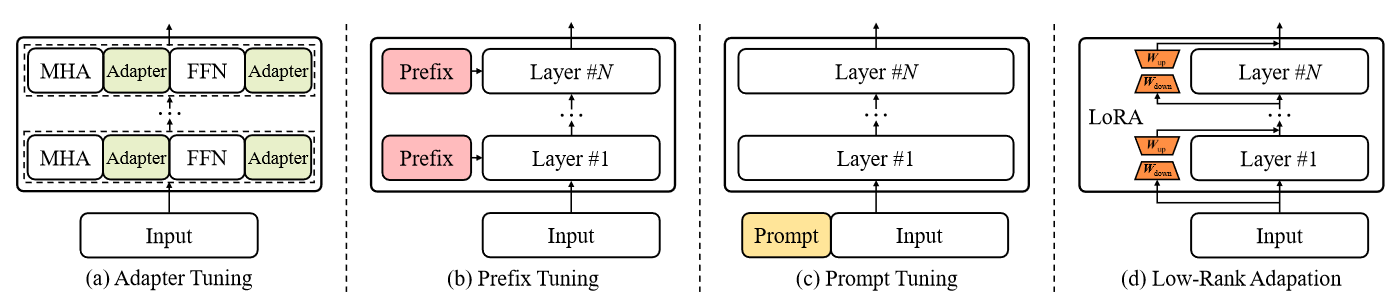

"Since LLMs consist of a huge amount of model parameters, it would be costly to perform the full parameter tuning...parameter-efficient fine-tuning has been an important topic that aims to reduce the number of trainable parameters while retaining a good performance as possible."

- Post-training quantization

- Quantization-aware training

- "INT8 weight quantization can often yield very good results on LLMs, while the performance of lower precision weight quantization depends on specific methods."

- "Activations are more difficult to be quantized than weights."

- "Efficient fine-tuning enhanced quantization is a good option to enhance the performance of quantized LLMs."
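As a hedged illustration of INT8 weight quantization, the sketch below applies a generic symmetric (absmax) scheme to a handful of weights after training. It is not one of the specific methods evaluated in the survey; the function names and sample weights are made up for the example.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric (absmax) quantization of weights to the range [-127, 127]."""
    absmax = max(abs(w) for w in weights)
    scale = absmax / 127.0 if absmax > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map the integers back to approximate floating-point weights."""
    return [v * scale for v in q]


w = [0.02, -0.5, 0.31, 1.27]
q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(w, w_hat))
print(q, scale, max_err)
```

The rounding error per weight is bounded by half the scale, which hints at why a single large outlier (more common in activations than in weights) makes quantization harder: it inflates the scale for every other value.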

Utilization

"After pre-training or adaptation tuning, a major approach to using LLMs is to design suitable prompting strategies for solving various tasks."

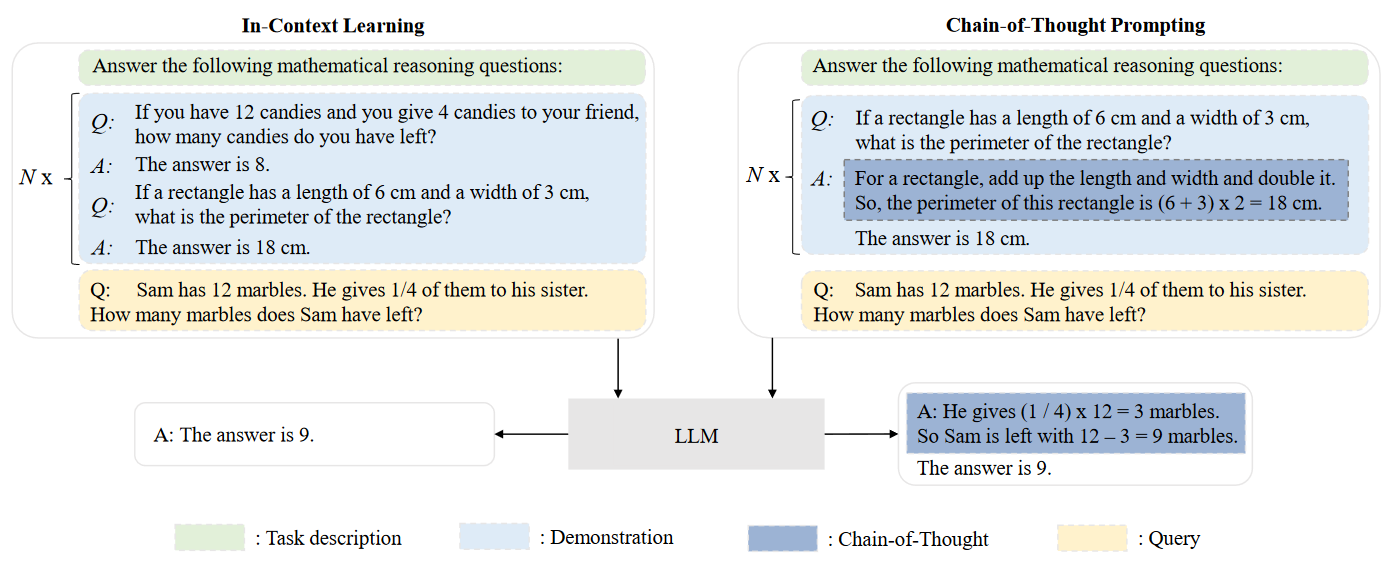

- Demonstration selection. "The performance of ICL tends to have a large variance with different demonstration examples, so it is important to select a subset of examples that can effectively leverage the ICL capability of LLMs."

- Demonstration format. "After selecting task examples, the next step is to integrate and format them into a natural language prompt for LLMs."

- Demonstration order. "LLMs are shown to sometimes suffer from the recency bias, i.e., they are prone to repeat answers that are near the end of demonstrations. Thus, it is important to arrange demonstrations (i.e., task examples) in a reasonable order."

- "ICL can be theoretically explained as the product of pre-training on documents that exhibit long-range coherence."

- "Theoretically, when scaling parameters and data, LLMs based on next-word prediction can emerge the ability of ICL by learning from the compositional structure (e.g., how words and phrases are combined to form larger linguistic units like sentences) present in language data."

- Task recognition. "In the first way, LLMs recognize the task from demonstrations and utilize the prior knowledge obtained from pre-training to solve new test tasks."

- Task learning. "In the second way, LLMs learn new tasks unseen in the pre-training stage only through demonstrations."

"Chain-of-Thought (CoT) is an improved prompting strategy to boost the performance of LLMs on complex reasoning tasks, such as arithmetic reasoning, commonsense reasoning, and symbolic reasoning. Instead of simply constructing the prompts with input-output pairs as in ICL, CoT incorporates intermediate reasoning steps that can lead to the final output into the prompts."

When CoT works for LLMs? "Since CoT is an emergent ability, it only has a positive effect on sufficiently large models (typically containing 10B or more parameters) but not on small models."

The source of CoT ability. "Regarding the source of CoT capability, it is widely hypothesized that it can be attributed to training on code since models trained on it show a strong reasoning ability. Intuitively, code data is well organized with algorithmic logic and programming flow, which may be useful to improve the reasoning performance of LLMs. However, this hypothesis still lacks publicly reported evidence of ablation experiments (with and without training on code)."
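The difference between a plain ICL prompt (input-output pairs) and a CoT prompt (pairs plus intermediate reasoning steps) can be sketched as follows. The `Q:/A:` layout, the `Demo` fields, and the sample demonstration are assumptions for illustration, not a format prescribed by the paper.

```python
from typing import TypedDict


class Demo(TypedDict):
    question: str
    rationale: str   # intermediate reasoning steps (used only for CoT)
    answer: str


def build_prompt(demos: list[Demo], question: str, use_cot: bool) -> str:
    """Concatenate demonstrations, then the new question for the model to answer."""
    parts = []
    for d in demos:
        reasoning = f"{d['rationale']} " if use_cot else ""
        parts.append(f"Q: {d['question']}\nA: {reasoning}The answer is {d['answer']}.")
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


demos: list[Demo] = [{
    "question": "A shelf holds 3 boxes with 4 books each. How many books are there?",
    "rationale": "There are 3 boxes and each has 4 books, so 3 * 4 = 12.",
    "answer": "12",
}]
new_question = "A bus has 5 rows of 2 seats. How many seats are there?"
icl_prompt = build_prompt(demos, new_question, use_cot=False)
cot_prompt = build_prompt(demos, new_question, use_cot=True)
print(cot_prompt)
```

The only change between the two prompts is the rationale inside each demonstration, which is what encourages a sufficiently large model to write out its own reasoning before the final answer.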

Capacity Evaluation

"To examine the effectiveness and superiority of LLMs, a surge of tasks and benchmarks have been proposed for conducting empirical ability evaluation and analysis."

- Language Modeling. i.e. next token prediction

- Conditional Text Generation. e.g. machine translation, text summarization, and question answering

- Code Synthesis. Unlike natural language, generated code can be checked directly by executing it

- Unreliable generation evaluation. "LLMs have been capable of generating texts with a comparable quality to human-written texts, which however might be underestimated by automatic reference-based metrics. As an alternative evaluation approach, LLMs can serve as language generation evaluators to evaluate a single text, compare multiple candidates, and improve existing metrics. However, this evaluation approach still needs more inspections and examinations in real-world tasks."

- Underperforming specialized generation. "LLMs may fall short in mastering generation tasks that require domain-specific knowledge or generating structured data. It is non-trivial to inject specialized knowledge into LLMs, meanwhile maintaining the original abilities of LLMs."

- Closed-Book QA. Tests factual knowledge without using external resources

- Open-Book QA. Tests factual knowledge from external knowledge bases or document collections

- Knowledge Completion. Completing or predicting missing parts of knowledge units (e.g. knowledge triples such as (Paris, Capital-Of, France))

- Hallucination. "LLMs are prone to generate untruthful information that either conflicts with the existing source or cannot be verified by the available source. Even the most powerful LLMs such as ChatGPT face great challenges in mitigating the hallucinations of the generated texts. This issue can be partially alleviated by special approaches such as alignment tuning and tool utilization."

- Knowledge recency. "The parametric knowledge of LLMs is hard to be updated in a timely manner. Augmenting LLMs with external knowledge sources is a practical approach to tackling the issue. However, how to effectively update knowledge within LLMs remains an open research problem."

- Knowledge Reasoning. "Rely on logical relations and evidence about factual knowledge to answer the given question."

- Symbolic Reasoning. "Focus on manipulating the symbols in a formal rule setting to fulfill some specific goal, where the operations and rules may have never been seen by LLMs during pre-training."

- Mathematical Reasoning. "Comprehensively utilize mathematical knowledge, logic, and computation for solving problems or generating proof statements."

- Reasoning inconsistency. "LLMs may generate the correct answer following an invalid reasoning path, or produce a wrong answer after a correct reasoning process, leading to inconsistency between the derived answer and the reasoning process. The issue can be alleviated by fine-tuning LLMs with process-level feedback, using an ensemble of diverse reasoning paths, and refining the reasoning process with self-reflection or external feedback."

- Numerical computation. "LLMs face difficulties in numerical computation, especially for the symbols that are seldom encountered during pre-training. In addition to using mathematical tools, tokenizing digits into individual tokens is also an effective design choice for improving the arithmetic ability of LLMs."

- Human alignment. "It is desired that LLMs could well conform to human values and needs, i.e., human alignment, which is a key ability for the broad use of LLMs in real-world applications. To evaluate this ability, existing studies consider multiple criteria for human alignment, such as helpfulness, honesty, and safety."

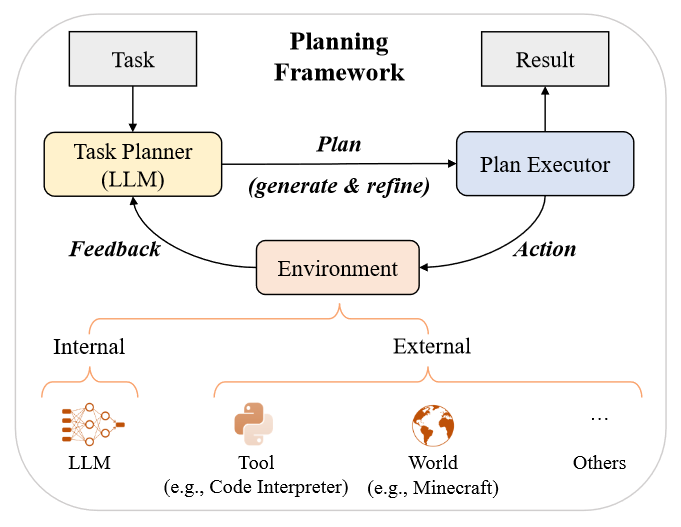

- Interaction with external environment. "LLMs have the ability to receive feedback from the external environment and perform actions according to the behavior instruction, e.g., generating action plans in natural language to manipulate agents."

- Tool manipulation. "When solving complex problems, LLMs can turn to external tools if they determine it is necessary. By encapsulating available tools with API calls, existing work has involved a variety of external tools, e.g., search engine, calculator, and compiler, to enhance the performance of LLMs on several specific tasks."
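The idea of "encapsulating available tools with API calls" can be sketched with a toy dispatcher. Everything here is hypothetical: the `CALL <tool>: <argument>` convention, the `TOOLS` registry, and the small calculator are illustrative assumptions, and a real system would get the call text from an LLM rather than a hard-coded string.

```python
import ast
import operator

_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculator(expression: str) -> float:
    """Evaluate +, -, *, / arithmetic without using eval()."""
    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -ev(node.operand)
        raise ValueError("unsupported expression")
    return ev(ast.parse(expression, mode="eval").body)


TOOLS = {"calculator": calculator}  # hypothetical tool registry


def run_tool_call(model_output: str):
    """Parse a hypothetical 'CALL <tool>: <argument>' line and run the tool."""
    if not model_output.startswith("CALL "):
        return None  # the model answered directly; no tool needed
    name, _, argument = model_output[len("CALL "):].partition(":")
    return TOOLS[name.strip()](argument.strip())


print(run_tool_call("CALL calculator: 12 * (3 + 4)"))
```

In a full loop the tool result would be appended to the prompt so the model can continue generating with the computed value, which also sidesteps the numerical computation weakness noted earlier.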

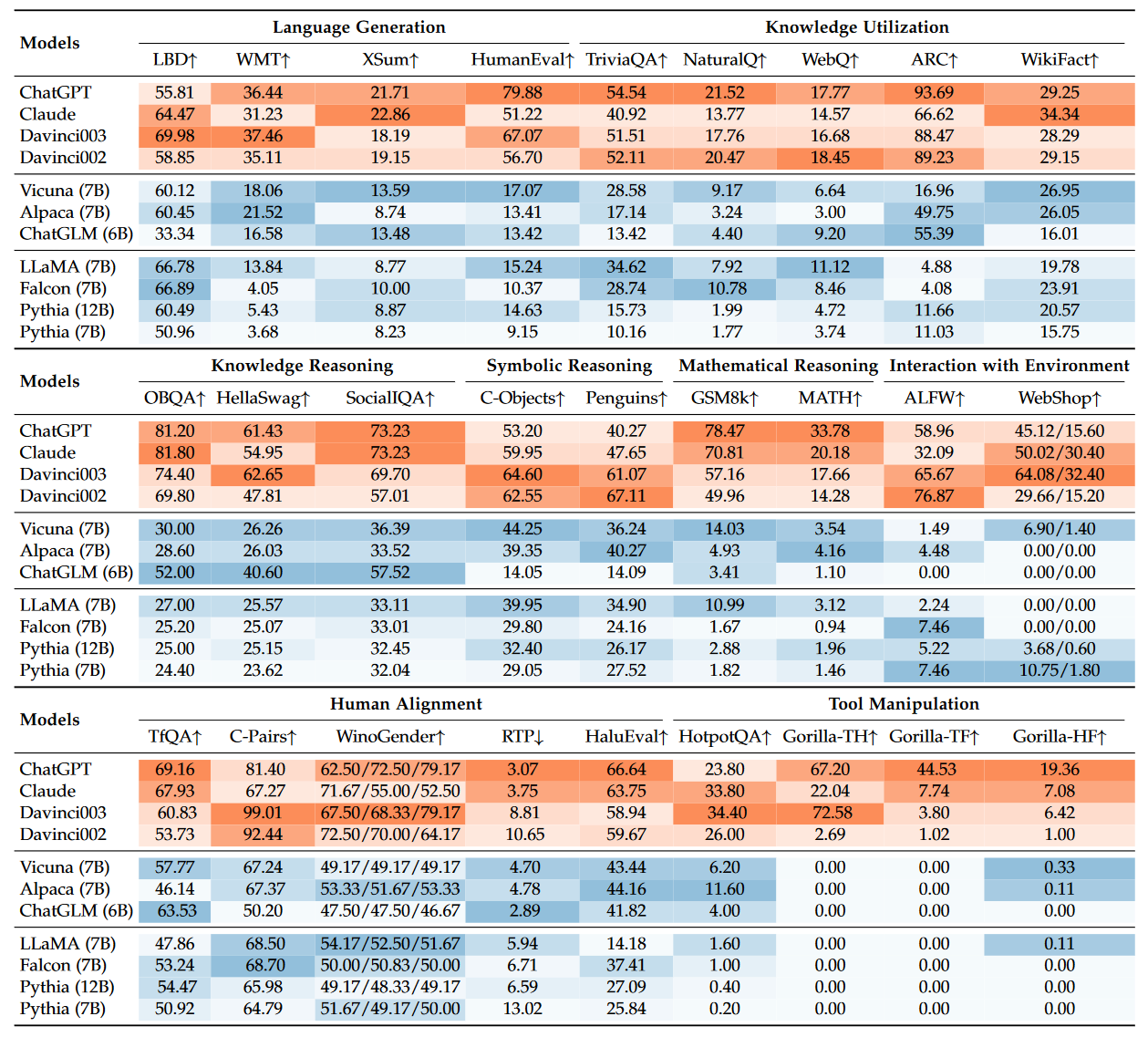

- "These four closed-source models achieve promising results as general-purpose task solvers, in which ChatGPT mostly performs the best."

- "All the comparison models perform not well on very difficult reasoning tasks."

- "Instruction-tuned models mostly perform better than the base models."

- "These small-sized open-source models perform not well on mathematical reasoning, interaction with environment, and tool manipulation tasks."

- "The top-performing model varies on different human alignment tasks."

- "As a more recently released model, Falcon-7B achieves a decent performance, especially on language generation tasks."

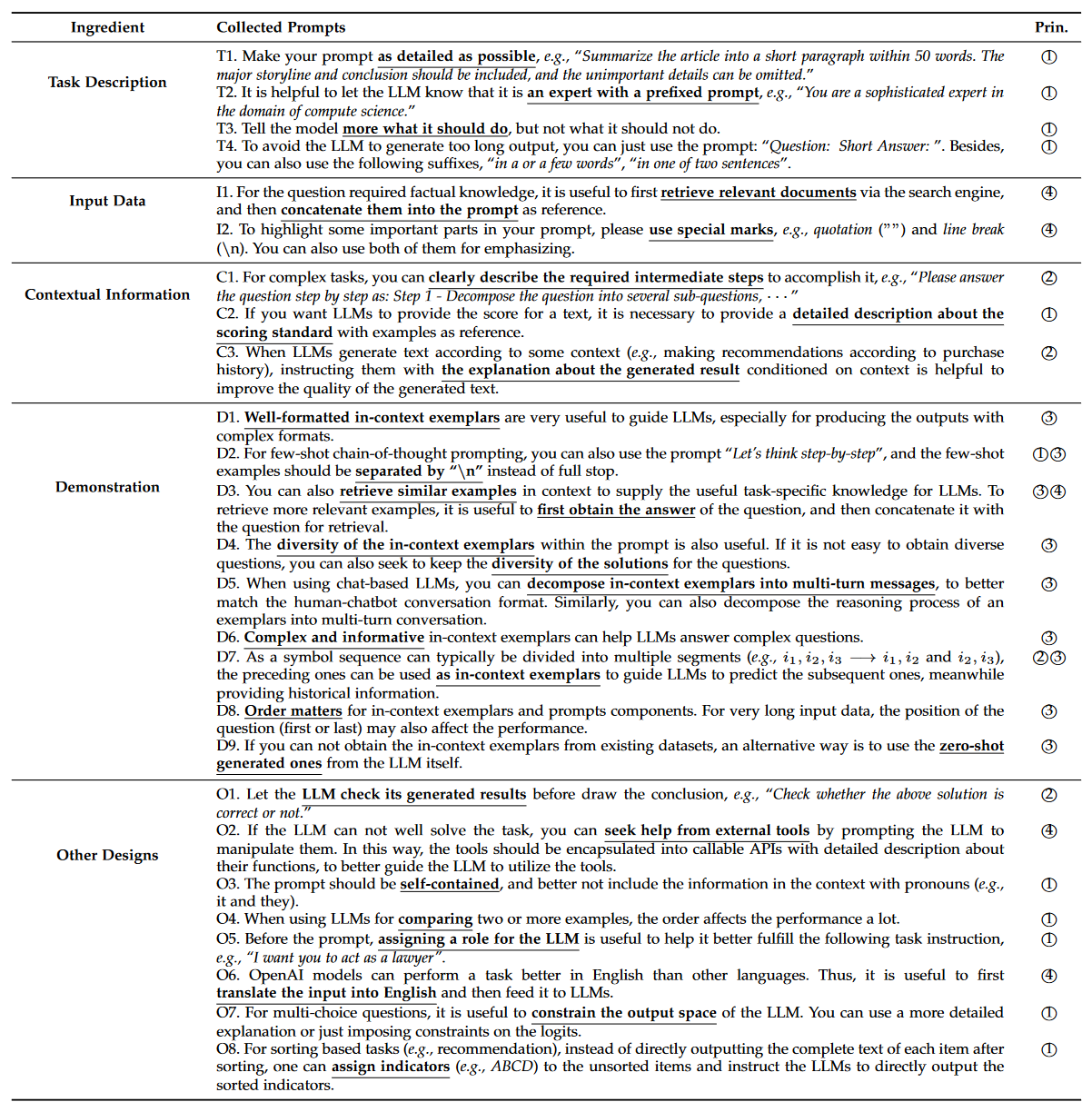

A Practical Guidebook of Prompt Design

"Prompting is the major approach to utilizing LLMs for solving various tasks." The quality of prompts largely influences the performance of LLMs.

- Task description

- Input data

- Contextual information

- Prompt style

- Expressing the task goal clearly

- Decomposing into easy, detailed sub-tasks

- Providing few-shot demonstrations

- Utilizing model-friendly format

- "Carefully designed prompts can boost the zero-shot or few-shot performance of ChatGPT."

- "More complex tasks can benefit more from careful prompt engineering on ChatGPT."

- "For mathematical reasoning tasks, it is more effective to design specific prompts based on the format of programming language."

- "In knowledge utilization and complex reasoning tasks, ChatGPT with proper prompts achieves comparable performance or even outperforms the supervised baseline methods."

- "Through suitable prompt engineering, LLMs can handle some non-traditional NLP tasks."

Applications

"As LLMs are pre-trained on a mixture of source corpora, they can capture rich knowledge from large-scale pre-training data, thus having the potential to serve as domain experts or specialists for specific areas."

- Healthcare. "LLMs are capable of handling a variety of healthcare tasks, e.g., biology information extraction, medical advice consultation, mental health analysis, and report simplification...However, LLMs may fabricate medical misinformation, e.g., misinterpreting medical terms and suggesting advice inconsistent with medical guidelines. In addition, it would also raise privacy concerns to upload the health information of patients into a commercial server that support the LLM."

- Education. "LLMs can achieve student-level performance on standardized tests in a variety of subjects of mathematics (e.g., physics, computer science) on both multiple-choice and free-response problems. In addition, empirical studies have shown that LLMs can serve as writing or reading assistant for education...However, the application of LLMs in education may lead to a series of practical issues, e.g., plagiarism, potential bias in AI-generated content, overreliance on LLMs, and inequitable access for non-English speaking individuals."

- Law. "LLMs exhibit powerful abilities of legal interpretation and reasoning...Despite the progress, the use of LLMs in law raises concerns about legal challenges, including copyright issues, personal information leakage, or bias and discrimination."

- Finance. "LLMs have been employed on various finance related tasks, such as numerical claim detection, financial sentiment analysis, financial named entity recognition, and financial reasoning...Nevertheless, it is imperative to consider the potential risks in the application of LLMs in finance, as the generation of inaccurate or harmful content by LLMs could have significant adverse implications for financial markets."

- Scientific research. "Prior research demonstrates the effectiveness of LLMs in handling knowledge-intensive scientific tasks (e.g., PubMedQA, BioASQ), especially for LLMs that are pre-trained on scientific-related corpora (e.g., UL2, Minerva)...LLMs hold significant potential as helpful assistants across various stages of the scientific research pipeline...Despite these advances, there is much room for improving the capacities of LLMs to serve as helpful, trustworthy scientific assistants, to both increase the quality of the generated scientific content and reduce the harmful hallucinations."

Conclusion and Future Directions

Theory and Principle

One of the great mysteries of LLMs is how "information is distributed, organized, and utilized through the very large, deep neural network." Scaling appears to play a critical role in unveiling sudden performance gains thanks to emergent abilities (i.e. in-context learning, instruction following and step-by-step reasoning). However, when and how these emergent abilities are obtained by LLMs remains a mystery. "Since emergent abilities bear a close analogy to phase transitions in nature, cross-discipline theories or principles (e.g., whether LLMs can be considered as some kind of complex systems) might be useful to explain and understand the behaviors of LLMs."

Model Architecture

The causal decoder Transformer has become the "de facto architecture for building LLMs." Model capacity is enhanced by long context windows, but these can become a bottleneck because the computational cost of attention grows quadratically with context length. "It is important to investigate the effect of more efficient Transformer variants in building LLMs," e.g., sparse attention. It is also necessary to investigate extending existing architectures as a way of effectively supporting "data update and task specialization."

Model Training

"In practice, it is very difficult to pre-train capable LLMs, due to the huge computation consumption and the sensitivity to data quality and training trick. Thus, it becomes particularly important to develop more systemic, economical pre-training approaches for optimizing LLMs, considering the factors of model effectiveness, efficiency optimization, and training stability."

Model Utilization

"Since fine-tuning is very costly in real applications, prompting has become the prominent approach to using LLMs." Task descriptions combined with demonstration examples leverage in-context learning abilities to outperform full-data fine-tuned models in some cases. However, existing prompting approaches still have several deficiencies:

- "It involves considerable human efforts in the design of prompts."

- "Some complex tasks (e.g., formal proof and numerical computation) require specific knowledge or logic rules, which may not be well expressed in natural language or demonstrated by examples."

- "Existing prompting strategies mainly focus on single-turn performance."

"LLMs exhibit a tendency to generate hallucinations, which are texts that seem plausible but may be factually incorrect. What is worse, LLMs might be elicited by intentional instructions to produce harmful, biased, or toxic texts for malicious systems, leading to the potential risks of misuse." Reinforcement learning from human feedback (RLHF) has been widely used to develop well-aligned LLMs. "However, RLHF heavily relies on high-quality human feedback data from professional labelers, making it difficult to be properly implemented in practice. Therefore, it is necessary to improve the RLHF framework for reducing the efforts of human labelers and seek a more efficient annotation approach with guaranteed data quality."

Application and Ecosystem

"As LLMs have shown a strong capacity in solving various tasks, they can be applied in a broad range of real-world applications (i.e., following task-specific natural language instructions)...In a broader scope, this wave of technical innovation would lead to an ecosystem of LLM-empowered applications (e.g., the support of plugins by ChatGPT), which has a close connection with human life...It is promising to develop more smart intelligent systems (possibly with multi-modality signals) than ever. However, in this development process, AI safety should be one of the primary concerns, i.e., making AI lead to good for humanity but not bad."
