Simple RAG-Based AI Chatbot

Generated with DALL-E 3

AI chatbots are great, especially those that (purportedly) have trillions of parameters and are essentially a giant compression of the entire internet. Most of them can ingest your own documents as knowledge for their responses. This is great, but they usually limit the number of documents that you can feed them, likely because they are simply exploiting the large input context length of today’s models (e.g., 128k tokens, or approximately 500 pages of text). However, if you have more documents than that, or you are not interested in uploading your personal knowledge to some company’s servers, then you have to look elsewhere.

Now…this tutorial is nothing new. There are lots of tutorials out there on building very simple RAG-enabled chatbots like the one I am about to show you. So, this was more of an exercise on my part, but hopefully I can explain each of the basic components of RAG in a way that will help you grasp the concept. I will be using the LangChain API to facilitate the interaction with the documents, and the OpenAI GPT-4o-mini API as the generation model. But, after understanding the core concepts of the RAG architecture, you can use any other API to build your own chatbot.

Brief overview of RAG

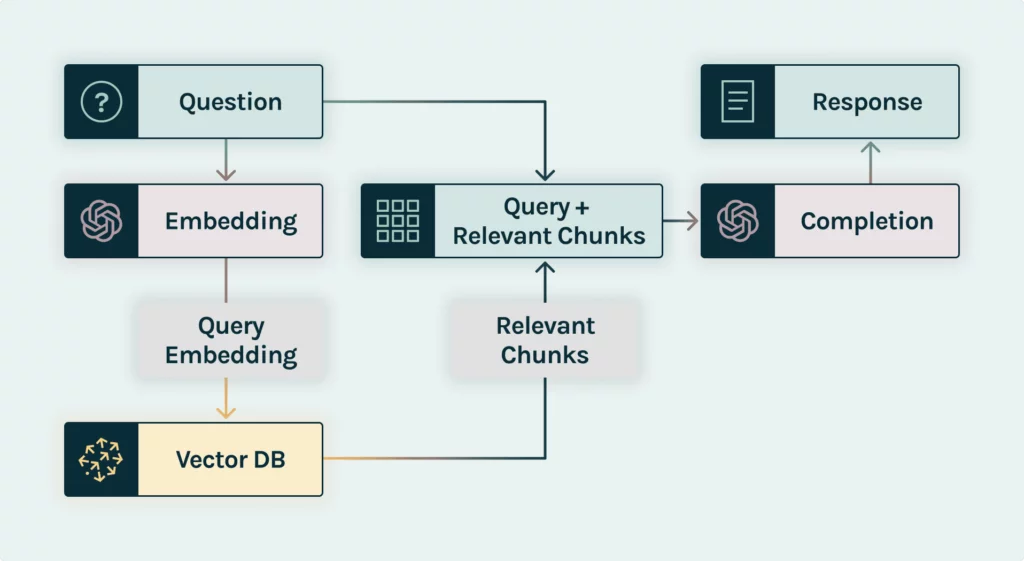

Basic retrieval-augmented generation (RAG) architecture. link

RAG is a generative AI method that involves finding relevant knowledge or documents from some source and then including that information in the prompt to the model. RAG was first introduced by Lewis et al. in 2020. Most of the time the knowledge is text, since that is where LLMs are strongest. However, recent multi-modal breakthroughs are starting to change this.

The RAG model is basically two parts:

- Retrieval: This is responsible for finding the most relevant documents or knowledge from a source. This can be done using a variety of methods. In this tutorial we will use cosine similarity to find documents that have a similar embedding vector to the query vector. We return the top \(k\) documents to the next part.

- Generation: This takes the top \(k\) documents and the prompt and generates a response. This can be done in a variety of ways. In this tutorial we will simply concatenate the top \(k\) documents to the prompt and send it to the model.

In the figure, you can think of the left third as the retrieval step, and the right two-thirds as the generation step.
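To make the two parts concrete, here is a minimal pure-Python sketch of the idea. It is not the LangChain code used later in this tutorial: the function names (`cosine_similarity`, `retrieve_top_k`, `build_prompt`) and the tiny 3-dimensional vectors are made up for illustration, whereas in a real pipeline the vectors come from an embedding model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve_top_k(query_vec, doc_vecs, k):
    """Retrieval: indices of the k documents most similar to the query."""
    ranked = sorted(
        range(len(doc_vecs)),
        key=lambda i: cosine_similarity(query_vec, doc_vecs[i]),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query, docs):
    """Generation input: the query followed by the retrieved documents."""
    lines = [f"Query: {query}"]
    for n, doc in enumerate(docs, start=1):
        lines.append(f"Doc{n}: {doc}")
    return "\n".join(lines)

# Toy "embeddings" (made-up numbers, only for illustration).
texts = [
    "strides are short accelerations",
    "how to bake bread",
    "hill strides build speed",
]
vectors = [[0.9, 0.1, 0.0], [0.0, 0.2, 0.9], [0.8, 0.3, 0.1]]
query_vector = [1.0, 0.2, 0.0]

top = retrieve_top_k(query_vector, vectors, k=2)
prompt = build_prompt("What are strides?", [texts[i] for i in top])
print(prompt)
```

The two running-related texts are selected and the bread one is left out, which is all "retrieval" means here. The prompt is then what gets sent to the language model.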

Ingesting the knowledge

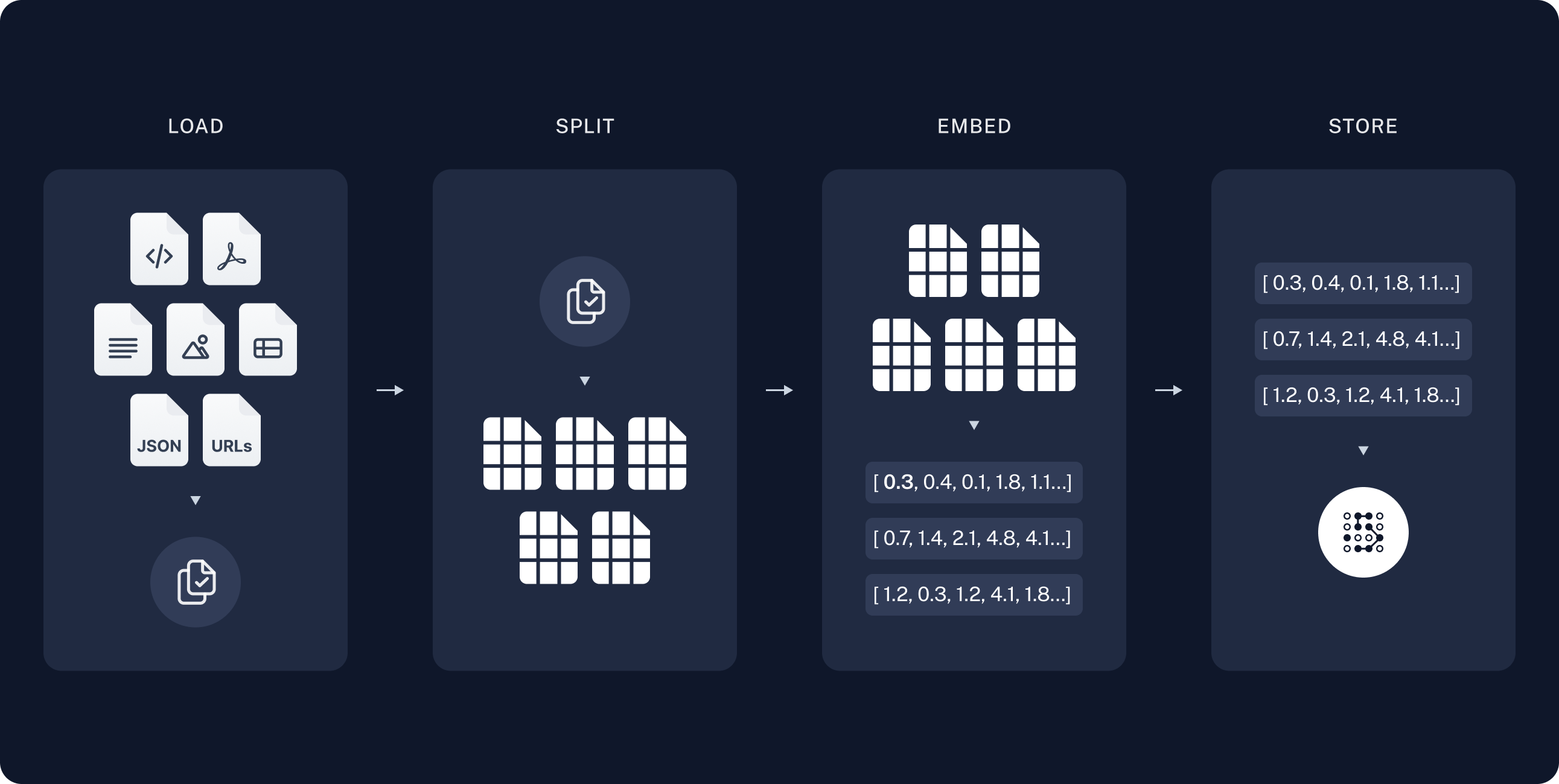

Basic retrieval-augmented generation (RAG) ingestion scheme. link

However, I would argue that the most important part of building any RAG application is how you ingest the knowledge that you want to search. This involves a few steps as highlighted by the figure above:

Loading

First we need to gather all of the raw documents from whatever source. This can be local files, a database, the web, social media, etc. For this tutorial, I manually scraped podcast transcripts from Spotify, cleaned them using BeautifulSoup, and saved the resulting text as text files. LangChain has a bunch of ingestion helpers, but you could use standard Python libraries to do this.

Let’s jump into some code for this. First let’s load all of the relevant libraries that we are going to need (OpenAI for the generation step, dotenv to load API keys from the local .env file, numpy for some number stuff, os for some file stuff, and all of the langchain things for RAG stuff).

from openai import OpenAI

from dotenv import load_dotenv, find_dotenv

from langchain_community.vectorstores import SKLearnVectorStore

from langchain_community.embeddings import SentenceTransformerEmbeddings

from langchain_community.document_loaders import TextLoader, DirectoryLoader

from langchain_text_splitters import SentenceTransformersTokenTextSplitter

from sentence_transformers import CrossEncoder

import os

import numpy as np

Now, first thing to do is to load all of the documents. I have a directory of text files, which are the cleaned transcripts, so I will take advantage of the DirectoryLoader and TextLoader classes from LangChain to load all of the documents.

loader = DirectoryLoader('transcripts/cleaned/', loader_cls=TextLoader)

docs = loader.load()

len(docs) # output: 214

What is special about the LangChain document loaders is that they also include a metadata attribute which contains a dictionary of metadata. In this case it loads some unique ID for each document along with the filepath as source. However, I would like to include the podcast episode number/title as a field as well, which will be useful in the generation step later.

for i, doc in enumerate(docs):

    docs[i].metadata['episode'] = doc.metadata['source'].split('/')[-1].split('.')[0].split('_')[-1]

Here we are just extracting the episode number from the filename.
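If the chained `split` calls are hard to read, the same extraction can be sketched with `pathlib`. This assumes the filenames look like `episode_149.txt`, as in the `source` paths of this corpus. The helper name `episode_from_source` is hypothetical.

```python
from pathlib import PurePosixPath

def episode_from_source(source):
    """Return the text after the last underscore in the file's stem."""
    stem = PurePosixPath(source).stem  # 'episode_149.txt' -> 'episode_149'
    return stem.rsplit("_", 1)[-1]     # 'episode_149' -> '149'

print(episode_from_source("transcripts/cleaned/episode_149.txt"))
```

One small difference: `.stem` only strips the final extension, while `split('.')[0]` cuts at the first dot. For filenames with a single dot the two behave the same.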

Splitting

Now that we have all of the documents loaded, we need to split them into chunks that would be more easily digestible by the language model. This is the first point at which you need to make a decision. How do you want to split the documents? You could split them by number of words, sentences, or at paragraphs. For me, I think it is important to align our content with the models that we use. I decided to split the documents by number of tokens, since the embedding models that we will use next are trained on a fixed length of tokenized text.

It is here that you can also decide how much your chunks overlap. For example, you could have chunks that are 512 tokens long and overlap by 256 tokens. This may allow you to have more context in the retrieval step. For simplicity I will just split the documents into 512 token chunks with 0 overlap.

token_splitter = SentenceTransformersTokenTextSplitter(chunk_overlap=0, tokens_per_chunk=512, model_name='BAAI/bge-large-en-v1.5')

split_docs = token_splitter.split_documents(docs)

len(split_docs) # output: 9251

Interestingly, we have to provide a model in this splitter, even though we are not using it to generate embeddings. This is because the splitter uses the model’s tokenizer to count tokens and split the text into chunks. This is important because we want the chunks to be tokenized in the same way as they will be by the embedding model that we use in the retrieval step.
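To make the overlap idea concrete, here is a small sliding-window sketch over a list of token ids. It only illustrates the concept and is not how the LangChain splitter is implemented internally. The function name `chunk_tokens` and the toy token list are assumptions.

```python
def chunk_tokens(tokens, chunk_size, overlap=0):
    """Split a token list into windows of chunk_size sharing `overlap` tokens."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window already reached the end of the text
    return chunks

tokens = list(range(10))  # stand-in for real token ids
no_overlap = chunk_tokens(tokens, chunk_size=4, overlap=0)
half_overlap = chunk_tokens(tokens, chunk_size=4, overlap=2)

print(no_overlap)    # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
print(half_overlap)  # [[0, 1, 2, 3], [2, 3, 4, 5], [4, 5, 6, 7], [6, 7, 8, 9]]
```

With overlap, every token in the middle of the text appears in two chunks. That gives retrieval more surrounding context at the cost of more chunks to embed and store.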

From the 214 original documents that we had, we now have 9251 chunks after splitting. With some back of the envelope math, we can estimate that we have about 43 chunks per document and our corpus is about \(9251 \times 512 \approx 4.7\) million tokens! For reference, the max input token length for GPT-4o at the time of writing this was 128k tokens, 200k tokens for Claude 3.5 Sonnet, and 1M tokens for Gemini 1.5 Pro. What this means is our corpus is large enough that we can’t just dump everything into a single query. Our efforts to use RAG are not in vain.
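The back-of-the-envelope math can be checked in a few lines. The counts and context window sizes are the ones quoted above. The token total is an upper bound, since it assumes every chunk is a full 512 tokens.

```python
num_docs = 214
num_chunks = 9251
tokens_per_chunk = 512

chunks_per_doc = num_chunks / num_docs
max_corpus_tokens = num_chunks * tokens_per_chunk  # upper bound: some chunks are shorter

# Context window sizes as quoted in the text (at the time of writing).
context_windows = {
    "GPT-4o": 128_000,
    "Claude 3.5 Sonnet": 200_000,
    "Gemini 1.5 Pro": 1_000_000,
}

print(f"{chunks_per_doc:.1f} chunks per document")   # 43.2
print(f"{max_corpus_tokens:,} tokens at most")        # 4,736,512
for model, window in context_windows.items():
    ratio = max_corpus_tokens / window
    print(f"{model}: corpus is about {ratio:.1f}x the context window")
```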

Embedding & Storage

Now this is where some natural language processing (NLP) magic starts to come in. We need to convert all of these text chunks into numbers, which is what the AI and language models understand. What’s more, it would be cool if we could convert it into numbers that also have some sort of meaning. This is where embeddings come in.

What we will do is use an embedding model (BAAI/bge-large-en-v1.5 in our case, because it is a very good model that I can run on my GPU) to convert all of the text chunks into vectors (a fixed-length array of numbers, essentially). What is cool about these embedding models is that they were trained on massive amounts of text to create similar vectors for similar text. So if two pieces of text are similar, then the difference or distance between their vectors should be small and we can assume they contain similar information. This is the crux of the retrieval step in RAG. We will exploit this to find the text chunks in our library that are most similar to our search query. There are lots of tutorials out there on embeddings, but one I really like is from Lena Voita’s NLP Course For You (it helps to have a little math background).
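For readers who like to see it written down, the cosine similarity used for retrieval in this tutorial, and its link to the "distance" between vectors, can be expressed as follows. The symbols \(\mathbf{q}\) (query embedding) and \(\mathbf{d}\) (chunk embedding) are notation chosen here for illustration.

```latex
\[
\operatorname{sim}(\mathbf{q}, \mathbf{d})
  = \frac{\mathbf{q} \cdot \mathbf{d}}{\lVert \mathbf{q} \rVert \, \lVert \mathbf{d} \rVert},
\qquad
\lVert \mathbf{q} - \mathbf{d} \rVert^{2}
  = 2 - 2 \operatorname{sim}(\mathbf{q}, \mathbf{d})
\quad \text{when } \lVert \mathbf{q} \rVert = \lVert \mathbf{d} \rVert = 1 .
\]
```

In other words, for unit-length vectors a high cosine similarity and a small Euclidean distance are the same thing, so ranking by either gives the same order.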

Let’s implement this step and convert all of our text chunks into embeddings. While we are at it, we need somewhere to store all of these vectors. In the RAG world, these are called vector databases or vector stores. There are tons of these out there that can be hosted in the cloud or on your computer. To keep things very simple, I am going to use the SKLearnVectorStore from LangChain, which is just a simple wrapper around the sklearn library. There may be more convenient and efficient ways to store these vectors out there which can have a big impact on retrieval time.

ef = SentenceTransformerEmbeddings(model_name='BAAI/bge-large-en-v1.5', model_kwargs={'device': 'cuda'})

persist_path = os.path.join('storage', 'swapbot.parquet')

vector_store = SKLearnVectorStore.from_documents(

    documents=split_docs,

    embedding=ef,

    persist_path=persist_path,

    serializer='parquet'

)

vector_store.persist()

This step will take a while, since we are converting roughly 4.7 million tokens into vectors. The persist method will save the vectors to disk so that we can load them later. For me, using an NVIDIA RTX 3090 Ti, this step took about 3 minutes, but I halted this process on a base-level M2 MacBook Air after 22 minutes.

Now we do the thing!

OK! Now that we have a vector database filled with all of the text chunk representations, we can start to perform the retrieval. As mentioned earlier, we are going to exploit the vector representations of the text chunks and the query to find the most similar documents to the query using some math. Luckily, we don’t have to perform any of the math ourselves, as we can use the built-in LangChain functions.

Since this is a running training podcast, let’s ask it about a very specific running training method called “strides.”

query = 'What are strides and why should I do them?'

retrieved = vector_store.similarity_search(query, k=10)

Now let’s take a look at a few of the 10 documents that were retrieved.

Document(metadata={'id': '6a982e9d-a5d5-4a55-a46e-bf385bae9466', 'episode': '149', 'source': 'transcripts/cleaned/episode_149.txt'}, page_content="strides, do them as repeats, do them in a focused way. and i think any listeners out there will see huge benefits...

Document(metadata={'id': '1f7ea6da-cfa4-412b-a6b2-573b64ba0f03', 'episode': '226', 'source': 'transcripts/cleaned/episode_226.txt'}, page_content="of you running strides are usually on slight downhills. but that being said, they're fast, but they're also smooth...

Document(metadata={'id': 'af168965-84ad-4e54-accd-1705590bcb82', 'episode': '162', 'source': 'transcripts/cleaned/episode_162.txt'}, page_content=". that push can be at times. so like on a 20 or 30, let's say a 32nd hill stride, like i often do, hit 345 great adjusted pace or 330 great adjusted pace...

You can see that the retrieved documents are actually quite relevant to the query. This is great. Now, just to be sure that we are getting the best documents at the top of the ranking, let’s perform an extra step.

Reranking

This can be a computationally expensive step, but it can help ensure that the documents you receive are the most relevant to your query. If you are running all of your models on a GPU, or a cluster of GPUs, then this step should hardly be noticeable. If you are running on a CPU, then this step could take a while.
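Conceptually, reranking just means scoring every (query, document) pair with a second, more careful scorer and keeping the best few. Here is a sketch of that pattern. The `word_overlap` scorer is a deliberately crude, hypothetical stand-in so the example runs without any model, whereas a real reranker scores each pair with a neural network. The function names and candidate texts are made up for illustration.

```python
def rerank(query, candidates, score_fn, top_n=2):
    """Score every (query, candidate) pair and keep the top_n candidates."""
    scored = [(score_fn(query, text), text) for text in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:top_n]]

def word_overlap(query, text):
    """Hypothetical stand-in scorer: fraction of query words found in the text."""
    query_words = set(query.lower().split())
    text_words = set(text.lower().split())
    return len(query_words & text_words) / len(query_words)

candidates = [
    "the race starts at dawn",
    "strides are short accelerations you should do weekly",
    "why strides help: what they are and how to do them",
]
best = rerank("what are strides and why should i do them", candidates, word_overlap)
print(best)
```

Because the scorer runs once per pair, the cost grows with the number of candidates. That is why reranking is applied only to the handful of documents that retrieval returned, not to the whole corpus.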

Let’s use the reranking model BAAI/bge-reranker-large to rerank the documents, extract the top 2 and then put them into a nice prompt that we can use to send to our language model.

reranker = CrossEncoder('BAAI/bge-reranker-large')

# Retrieval with reranking

tops = []

prompt = ''

prompt += f'Query: {query}\n'

pairs = [[query, doc.page_content] for doc in retrieved]

scores = reranker.predict(pairs)

top_2 = list(np.argsort(scores)[::-1][:2])

tops.append(top_2)

prompt += f"Doc1: {retrieved[top_2[0]].page_content}\n"

prompt += f"Metadata1: {retrieved[top_2[0]].metadata}\n"

prompt += f"Doc2: {retrieved[top_2[1]].page_content}\n"

prompt += f"Metadata2: {retrieved[top_2[1]].metadata}\n\n"

print(prompt)

Here is the output

Query: What are strides and why should I do them?

Doc1: only will your physiology change. but the way you think about yourself will too. so try to remember, remember us out there when you're running, like when it gets hard here, david and megan saying you're fucking awesome. and then when you stop be like, hear us and saying you're fucking awesome and that that's not going to change whether you stop and push through or or you push through that people are going to be like our that was was that david? i'm megan or was that cricket singing? definitely crickets. they're coming from within. okay, next question is on strides. forgive me if this has been addressed before, but i have a question about strides, how important is it to do them back - to - back? see, i aim to do for by 30 - second host rides on a 5 - mile. easy, run day, is it just as effective to pepper? then in whenever i hit a hill over the last two ish miles of my route or is it more beneficial to stop and go up and down the same hill four times mentally? it's easier for me to incorporate strides hill or flat this way this way so it continuously but or over the last two miles, but a stride should be done back - to - back for their physical benefits. i try to change my habits with them. this is a great one. i actually think it's a helpful primer for us to take a step back first. yeah, we like, what is a stride? so we did our interview with alex honnold and he flipped the interview on us. he came with a bunch of questions and his first one is, was his. first question was like, what the fuck is a strong? and it was really helpful for us as running. coach has to be like oh we should probably talk about this a little more. yes. eye - opening that like the smartest like one of the best athletes in human history is like what is the thing that you talked about almost every episode? okay. so a few principles first, a stride is an acceleration 30 seconds or less. 
not more than that on flats were up hills progressing to the fastest pace you can go while using long distance form. that's the terminology we use. essentially, this isn't a full sprint, where you're pumping your arms really hard. this is something where you're moving efficiently and eventually, you'll be able to get that really fast. something that

Metadata1: {'id': '531d8b87-8891-429d-af0d-17afb6a0ef1c', 'episode': '149', 'source': 'transcripts/cleaned/episode_149.txt'}

Doc2: of you running strides are usually on slight downhills. but that being said, they're fast, but they're also smooth. like, you know, you have worked your biomechanical output, you've worked this overtime to the point that you are running fast at smooth basis. and i think it that gets back to the crux of the question. like it should be a smooth form. like you don't look like you're sprinting, don't look like you're, you know, out of nfl combine going out and, you know, doing 40. and it's it's cool to see that like long distance form. yeah, and anyone who's ever sprinted before knows the difference here in that when you sprint, you're getting off the line as fast as you can, you're going into a dry phase and then you're pumping your arms and really going off your toes. in the form, it's totally different. a stride is your long distance form. so the correlation often ends up being to 800 meter or mile race pace for most people. and if you see those athletes racing, they, they look very relaxed. they're just moving fast with that type of effort. and there's a lot of reasons for this. i think the main 1 is just health because you want to make sure that you're not pulling muscles. and sprinters pull their hamstrings all the time, even with a million different rehab exercises before training. so you need to be careful of that. but # 2 is you actually want to work your slow twitch muscle fibers. and my favorite study of all time was in physiology reports in 2018. and it did a stride intervention for four to four, four to six weeks or something like that. and it found that the changes essentially made the slow twitch muscle fibers themselves stiffer. and that's kind of what we're trying to get out here. strides are so magic because they distribute to every pace because you're actually using the same muscle fibers you use in a marathon or in 100 miles. and yeah, so the effort level is just the fastest pace you can go while maintaining long distance form. 
ease into it then maybe hit close to your top speed and then ease back if you don't want to pull a muscle. and as you're talking about hitting close to your top speed, i think envision it kind of like the kick at the end of a 1500

Metadata2: {'id': '1f7ea6da-cfa4-412b-a6b2-573b64ba0f03', 'episode': '226', 'source': 'transcripts/cleaned/episode_226.txt'}

Great! Now that we have that we can send this prompt to our language model to generate a response. For the sake of time, simplicity, and cost (since we are providing it with all the knowledge), we will use the OpenAI API with the GPT-4o-mini model to generate a response.

systemMsg = "You are an intelligent and friendly assistant specialized in retrieving information from podcast transcripts, specifically from 'Some Work, All Play.' This podcast covers five to ten topics per episode, including running, training, races, scientific studies, pop culture, and more, all delivered with love, enthusiasm, and a strong science foundation. The hosts, Megan Roche, M.D., Ph.D., and David Roche, bring engaging and heartfelt discussions. Your goal is to provide accurate and relevant answers based on user questions, referencing or summarizing details from the transcripts. When possible, include context about the topics discussed, cite timestamps for detailed exploration, and align your tone with the warmth and energy of the podcast. If a question cannot be directly answered, explain this clearly and offer alternative suggestions or areas to explore."

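# Load OPENAI_API_KEY from the local .env file, then create the API client

load_dotenv(find_dotenv())

client = OpenAI()

response = client.chat.completions.create(

    model='gpt-4o-mini',

    messages=[

        {'role': 'system', 'content': systemMsg},

        {'role': 'user', 'content': prompt},

    ],

)

print(response.choices[0].message.content)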

And here is the response generated by the model

Sample Response

Strides are a fantastic tool in a runner’s training toolbox, and there’s a lot of useful information about them discussed in the podcast!

What are strides? Strides are brief accelerations, typically lasting about 30 seconds or less, where you run at a fast pace while maintaining your long-distance running form. They’re not full sprints characterized by intense arm pumping or extreme effort; instead, strides should feel smooth and controlled, almost like a relaxed version of sprinting (Episode 149, timestamp 00:45).

Why should you do them?

- Physiological Benefits: Strides can help improve the efficiency of your running as they engage your slow-twitch muscle fibers, which are essential for endurance. Interestingly, a study highlighted in the podcast noted that regular strides can make these muscle fibers stiffer, enhancing their performance over time (Episode 226, timestamp 02:30).

- Form and Speed: By practicing strides, you develop your ability to maintain good running form at faster paces. This becomes particularly beneficial when you need to kick at the end of a race or when you’re navigating varied terrain (Episode 226, timestamp 03:15).

- Injury Prevention: Engaging in strides allows you to work on speed without the same risks associated with all-out sprints, like pulling muscles (Episode 226, timestamp 02:10).

- Smooth Transition: Incorporating strides into your runs can ease your body into faster paces, contributing to overall speed development (Episode 226, timestamp 03:30).

How to do them? Strides can be integrated easily into your runs. You can execute them on flat terrain or slight downhills, gradually working up to your top speed while ensuring that you keep a relaxed and smooth form (Episode 149, timestamp 01:00).

In summary, incorporating strides into your training can significantly enhance both your speed and efficiency as a runner, making them a valuable practice to include in your routine!

Not bad! If you look back at the information that we provided, it seems like the model did a good job of summarizing the key points and providing a coherent response. However, there are some areas that we can improve. For example, my system prompt asks the model to cite timestamps, and it clearly obeyed, but the retrieved chunks contain no timestamps at all. The timestamps in the response are therefore hallucinated, which should be addressed either by changing the system prompt or by including real timestamps in the documents.
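One cheap way to catch this particular problem is to check whether any timestamp cited in the response actually appears in the context that was sent to the model. The sketch below does this with a regular expression. The function name `unsupported_timestamps` and the `mm:ss` / `hh:mm:ss` pattern are assumptions, and this catches only this one kind of hallucination.

```python
import re

# Matches timestamps such as 00:45 or 1:02:30.
TIMESTAMP = re.compile(r"\b\d{1,2}:\d{2}(?::\d{2})?\b")

def unsupported_timestamps(response, context):
    """Return timestamps cited in the response that never appear in the context."""
    cited = TIMESTAMP.findall(response)
    return [ts for ts in cited if ts not in context]

response_text = "Strides are brief accelerations (Episode 149, timestamp 00:45)."
context_text = "a stride is an acceleration 30 seconds or less."

flagged = unsupported_timestamps(response_text, context_text)
print(flagged)  # ['00:45']
```

An empty list means every cited timestamp can be traced back to the retrieved text. Anything else is worth flagging or regenerating.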

Moving forward

This was a very simple example of how we can use a RAG-enabled chatbot to search through some knowledge and generate a relevant response. There are lots of ways that we can improve this though:

- Better Prompting: The system prompt can be improved to provide more context and information to the model. This can help guide the model to generate more accurate and relevant responses.

- Providing More Documents: We only used two documents after reranking in this example. We could test how many documents give the best responses without flooding the model with too much information.

- UI: This is just a Python script, but we could build a simple UI to make it more user-friendly.

- Error Handling: What if the model’s response is totally off? We could build in some error handling to rerun the model or provide a different response.

- Agents: We could create a specific chatbot agent, or multiple agents, that could handle a conversation more effectively. This could mean having one agent that is responsible for searching for information and another that is responsible for generating responses. This could also mean having an agent that is responsible for error handling. One foreseeable benefit here is that if a user wants to continue a conversation about information that was already retrieved, the agent could decide that there is no need for the retrieval step and skip straight to the response generation step. This sort of thing starts to introduce planning and reasoning into the chatbot.
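As a rough idea of what the error handling item could look like, here is a sketch of a retry loop. It is not tied to any particular API: `generate` and `is_acceptable` are hypothetical callables you would supply (for example, a function that calls the model and a function that validates its answer). The canned responses below stand in for real model output.

```python
def generate_with_retry(generate, is_acceptable, max_attempts=3):
    """Call generate() until is_acceptable(response) is true or attempts run out.

    Returns (response, attempts_used); response is None if every attempt failed.
    """
    for attempt in range(1, max_attempts + 1):
        response = generate()
        if is_acceptable(response):
            return response, attempt
    return None, max_attempts

# Stand-in for a model: the first answer cites a timestamp, the second does not.
canned = iter([
    "Strides are accelerations (Episode 149, timestamp 00:45).",
    "Strides are accelerations (Episode 149).",
])

answer, attempts = generate_with_retry(
    generate=lambda: next(canned),
    is_acceptable=lambda text: "timestamp" not in text,
)
print(attempts, answer)
```

In practice you would likely change something between attempts (the prompt, the number of documents, the temperature) rather than just asking again, and cap the attempts to keep costs down.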

And I’m sure there is a ton more that I am missing as well. Let me know if you have any ideas or suggestions!
